Human-Machine Capital: HRBP’s Guide to Cultivating Collaborative Intelligence in Workplace Knowledge Management

- Judy

- 3 hours ago

- 12 min read

In a world swept by the digital wave, enterprise knowledge management (KM) is undergoing its most profound transformation since the invention of the computer. From early paper filing cabinets to today's generative AI platforms, the evolution of knowledge management has always tracked technological innovation. The current revolution, triggered by large language models (LLMs) and retrieval-augmented generation (RAG), goes far beyond tool-level iteration. It is reconstructing the relationships between humans and knowledge and between humans and machines, upgrading knowledge management from a single function of "information storage and retrieval" to a core strategy of "human-machine collaborative value creation." This article analyzes the underlying logic of this transformation, explains how prompt engineering and context management have become the pillars of knowledge management in the new era, and shows the fundamental role of security governance in human-machine collaboration, with the aim of giving enterprises a complete framework for intelligent knowledge management practice.

I. Three Evolutions of Knowledge Management: From Static Storage to Dynamic Symbiosis

The history of knowledge management is essentially a continuous exploration of the proposition "how to efficiently acquire, precipitate, and apply knowledge." Each technological breakthrough has driven a paradigm shift in knowledge management, and the emergence of generative AI marks the entry of this field into a new "human-machine symbiosis" stage.

(I) KM 1.0: Document-based Physical Management (1990-2010)

In the 1990s, with the popularization of computer and network technologies, enterprise knowledge management was freed from the constraints of physical carriers for the first time. The core feature of this stage was "digital storage," which converted paper documents, manuals, reports, etc., into electronic files, achieving centralized management through file servers, ERP systems, or early knowledge bases (such as Lotus Notes). For example, IBM's global knowledge base built in the early 2000s digitized R&D documents distributed in 40 countries, allowing employees to access basic information through keyword searches, and improving cross-departmental knowledge sharing efficiency by approximately 30%.

However, KM 1.0 had insurmountable limitations:

Static nature: Once knowledge was entered into the system, it was rarely updated. A manufacturing enterprise was still using the 2005 version of its equipment maintenance manual, so new employees followed outdated procedures, which led to failures;

Fragmentation: Documents from different departments had inconsistent formats and chaotic naming conventions. The phenomenon that "the same policy is called 'expense reimbursement norms' in the finance department and 'travel expense standards' in the administrative department" was common;

Passivity: Knowledge required active retrieval by employees and relied on precise keyword matching. A retail store manager once spent 3 hours finding relevant documents because he did not know the official name of the "promotion approval process."

Knowledge management at this stage was more like a "digital archive," only solving the problem of "where to store knowledge" but failing to answer "how to make knowledge actively serve business."

(II) KM 2.0: Search-based Information Management (2010-2020)

With the maturity of big data and search engine technologies, knowledge management entered the era of "search as a service." The popularization of tools such as Elasticsearch and Solr enabled enterprises to improve knowledge acquisition efficiency through full-text retrieval, tag classification, and associated recommendations. Platforms like Guru and Confluence encapsulated knowledge into "cards" to support collaborative editing by employees. The technical team of an internet enterprise associated "microservice architecture-fault troubleshooting-code examples" through Confluence, accelerating new employees' problem-solving speed by 50%.

The progress of KM 2.0 was obvious, but it still failed to break through three bottlenecks:

Data silos: Knowledge in business systems (ERP/CRM), communication tools (emails/Teams), and document libraries was isolated in separate systems. The sales team of an automobile company had to switch between 3 systems to obtain complete customer information;

Semantic discontinuity: Search engines could not understand contextual associations, such as failing to recognize the logical relationship between "procurement processes" and "supplier evaluation." A procurement specialist in a fast-moving consumer goods company mistakenly selected a partner because he could not find the "supplier qualification review standards";

Scenario detachment: Knowledge was separated from business scenarios. Although a bank's compliance manual was detailed, customer managers still had to manually search for "wealth management product risk reminder scripts" when communicating with customers, affecting service experience.

Knowledge management at this stage still remained at the level of an "efficiency tool" and failed to touch the core of "knowledge creating value."

(III) KM 3.0: Intelligent Management Based on Generative AI (2023-present)

The breakthrough progress of generative AI and large language models has completely reconstructed the underlying logic of knowledge management. With RAG technology as the core, knowledge management has shifted from "passive response to retrieval" to "active generation of services," presenting three revolutionary characteristics:

Context understanding: AI no longer relies on keyword matching but parses semantics, scenarios, and user intentions through deep learning. For example, LyndonAI's Fusion platform, when processing the query "Q3 revenue decline," can automatically associate market competition data, supply chain delay records, and internal process vulnerability reports to generate multi-dimensional analysis;

Knowledge generation: Creating new content based on enterprise private data. When a consultant at a consulting company input customer industry reports and historical cases into the Kora system, the AI automatically generated a customized proposal framework covering "problem diagnosis-solutions-implementation steps," reducing the first draft completion time from 5 days to 4 hours;

Human-machine collaboration: AI undertakes mechanical tasks such as knowledge filtering, association, and generation, while humans focus on high-level tasks such as creativity and decision-making. In the R&D team of a medical device enterprise, AI is responsible for sorting out literature and analyzing experimental data, while engineers concentrate on technological innovation, shortening the new product development cycle by 30%.

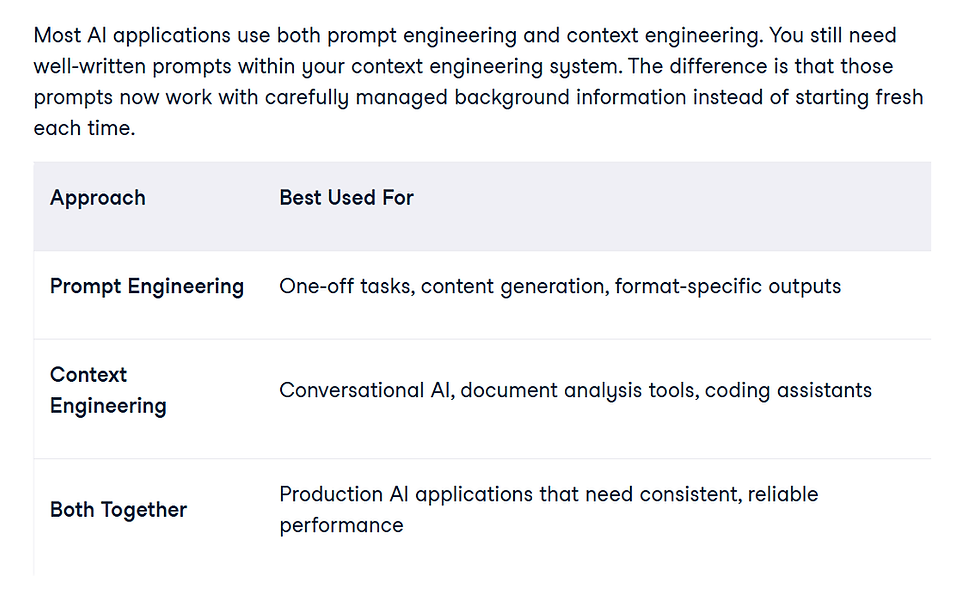

The essence of KM 3.0 is an "intelligent ecosystem," and Prompt Engineering and Context Management are the "dual engines" driving it: the former defines the communication rules between humans and AI, while the latter builds the "cognitive foundation" for AI to understand enterprises. Together, they support the full-chain release of value from knowledge "precipitation" to "creation."

II. Prompt Engineering: Redefining the "Language Protocol" for Human-Machine Collaboration, a Key Part of Enterprise KM

In the era of generative AI, "how to accurately express needs to AI" has become a key proposition in knowledge management. Prompt engineering enables AI to output results more suitable for business scenarios by optimizing the structure, parameters, and context of instructions. It is not only a technical means but also a "translation layer" and "protocol specification" for human-machine collaboration.

(I) The Essence of Prompt Engineering: From "Instruction Transmission" to "Intent Co-creation"

A prompt is a bridge for interaction between users and AI, but prompts in enterprise-level scenarios are by no means simple "questions" but "structured instruction sets" containing role settings, task objectives, data constraints, output formats, and other elements. For example:

Basic prompt: "List the top 3 customer complaints in Shanghai in Q4 2023" — only factual data can be obtained;

Enhanced prompt: "Analyze the reasons for the top 3 customer complaints in Shanghai in Q4 2023 combined with industry trends and propose improvement suggestions" — triggering AI's analysis and decision-making capabilities;

Situational prompt: "Assume you are the service manager of a certain automobile brand in Shanghai. Based on nearly three years of complaint data and competitor dynamics, design a 2024 customer experience improvement plan that includes budget allocation and KPI setting" — endowing AI with roles, data, and goals to generate implementable business plans.
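The idea of a prompt as a "structured instruction set" can be sketched in a few lines of Python. This is only an illustration: the `StructuredPrompt` class and its field names are hypothetical and are not taken from any platform mentioned in this article.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredPrompt:
    """Hypothetical container for the elements of an enterprise prompt."""
    role: str
    task: str
    data_sources: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self) -> str:
        """Assemble the elements into a single instruction string."""
        parts = [f"Role: {self.role}", f"Task: {self.task}"]
        if self.data_sources:
            parts.append("Data: " + "; ".join(self.data_sources))
        if self.output_format:
            parts.append(f"Output format: {self.output_format}")
        return "\n".join(parts)


# The situational prompt from the text, expressed as structured fields.
prompt = StructuredPrompt(
    role="Service manager of an automobile brand in Shanghai",
    task="Design a 2024 customer experience improvement plan",
    data_sources=["three years of complaint data", "competitor dynamics"],
    output_format="Plan with budget allocation and KPI setting",
)
print(prompt.render())
```

Keeping the elements as separate fields makes it possible to review or reuse the role and output format independently of the task.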

The core value of enterprise-level prompt engineering lies in narrowing the gap between "user intent" and "AI understanding". A test by a financial enterprise showed that unoptimized prompts led to a 42% deviation rate between AI-generated loan approval suggestions and actual business needs, while after calibration through prompt engineering, the deviation rate dropped to 8%.

(II) Three-Dimensional Architecture of Enterprise-Level Prompt Engineering

1. Role Dimension: Customized Prompt Template Library

Business needs vary significantly across different positions, and general prompts are difficult to meet professional requirements. Prompt engineering needs to design exclusive templates according to roles, such as:

HR scenario: Recruiters use prompts like "Please generate 5 interview questions based on candidate A's resume (Annex 1) and job JD (Annex 2), focusing on 'cross-departmental collaboration ability'. Each question should be marked with evaluation dimensions (such as communication skills/conflict resolution)";

Sales scenario: Account managers input "Generate 3 sets of negotiation strategies based on customer B's procurement history (Annex 3), competitor quotations (Annex 4), and this quarter's promotion policy (Annex 5). Each set should include 3 concession points and 2 pressure points, and estimate the transaction probability";

Technical scenario: Engineers input "Analyze system error logs (Annex 6), classify possible causes by 'hardware failure/software vulnerability/configuration error', sort by probability, and provide troubleshooting steps, prioritizing non-downtime solutions".

LyndonAI's VibeChat platform has 120+ built-in industry templates, allowing non-technical personnel to quickly generate professional prompts by dragging components (such as data sources, output formats, role settings). A workshop director of a manufacturing enterprise improved the efficiency of equipment repair order generation by 60% through this tool.
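A role-based template library can be approximated with Python's standard `string.Template`. The sketch below is an assumption about how such a library might be organized; the template ids, placeholder names, and the `build_prompt` helper are hypothetical and do not describe VibeChat's internals.

```python
from string import Template

# Hypothetical role-keyed template library; placeholders name the annexes to attach.
TEMPLATES = {
    "hr_interview": Template(
        "Generate $n interview questions based on the resume ($resume) and "
        "job description ($jd), focusing on '$focus'. "
        "Mark each question with its evaluation dimension."
    ),
    "tech_log_triage": Template(
        "Analyze the system error logs ($logs), classify possible causes as "
        "hardware failure, software vulnerability, or configuration error, "
        "sort by probability, and give troubleshooting steps, "
        "prioritizing non-downtime solutions."
    ),
}


def build_prompt(template_id: str, **values: str) -> str:
    """Fill a template; raises KeyError if the id or a placeholder is missing."""
    return TEMPLATES[template_id].substitute(**values)


print(build_prompt(
    "hr_interview",
    n="5",
    resume="Annex 1",
    jd="Annex 2",
    focus="cross-departmental collaboration ability",
))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing annex fails loudly instead of producing a prompt with an unfilled placeholder.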

2. Task Dimension: Structured Prompt Process Chain

Complex businesses need to be disassembled into multi-step prompt sequences to ensure that AI outputs conform to logical progression. For example, the prompt chain for the "market research" scenario:

① "Extract keywords of user pain points in new energy vehicles in 2023 from the industry report (Annex 7), sorted by frequency of occurrence";

② "Associate enterprise CRM data (data source 8) to analyze the specific performance of these pain points among users of our brand (such as complaint volume/satisfaction score)";

③ "Combine competitor solutions (Annex 9) and the enterprise's technical reserves (Annex 10) to generate 3 sets of product improvement suggestions, including function priority and R&D cycle estimation".

This "step-by-step guidance" model avoids the decline in output quality caused by AI information overload. The new product planning scheme generated by a home appliance enterprise through the prompt chain saw its adoption rate increase from 35% to 72%.
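The market research chain above can be sketched as a loop that feeds each answer into the next prompt. The `run_chain` function, the `{previous}` placeholder, and the `fake_model` stand-in are all assumptions made for illustration; a real deployment would replace `fake_model` with an actual model call.

```python
from typing import Callable

# Each step is a template that may reference the previous step's output via {previous}.
CHAIN = [
    "Extract user pain-point keywords from the industry report, sorted by frequency.",
    "Using CRM data, analyze how these pain points show up for our brand: {previous}",
    "Given competitor solutions and our technical reserves, "
    "propose 3 improvements for: {previous}",
]


def run_chain(steps: list[str], call_model: Callable[[str], str]) -> list[str]:
    """Run prompts in order, feeding each output into the next prompt."""
    outputs: list[str] = []
    previous = ""
    for step in steps:
        prompt = step.format(previous=previous)
        previous = call_model(prompt)
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption); it only reports the prompt length."""
    return f"<answer to {len(prompt)}-char prompt>"


for number, answer in enumerate(run_chain(CHAIN, fake_model), start=1):
    print(number, answer)
```

Because every intermediate output is kept, a reviewer can inspect where a chain went wrong instead of judging only the final answer.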

3. Data Dimension: Dynamic Prompt Calibration Mechanism

General large models have limitations in understanding specific enterprise businesses and need to improve accuracy through dynamic calibration:

Industry terminology calibration: In medical enterprises, prompt models need to distinguish professional differences between "antibiotic resistance" and "drug tolerance" to avoid confusion;

Process rule calibration: In manufacturing, prompts need to embed unique rules such as "quality inspection before storage" and "batch traceability takes precedence over efficiency";

Cultural context calibration: In multinational enterprises, prompts need to adapt to business habits in different regions. For example, prompts for the Japanese market need to include considerations of the "nemawashi (pre-consultation)" culture.

Through dynamic calibration, a multinational logistics enterprise increased the compliance rate of AI-generated international freight plans in different regions from 68% to 95%.
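One simple way to picture calibration is as a preamble that is prepended to the user's request. The sketch below assumes the terminology, process rules, and regional notes are maintained as plain data; the `calibrate` function and its parameters are hypothetical, and the glossary definitions are illustrative placeholders rather than vetted medical wording.

```python
from typing import Optional


def calibrate(prompt: str, glossary: dict[str, str], rules: list[str],
              regional_note: Optional[str] = None) -> str:
    """Prepend matching glossary terms, process rules, and a regional note to a prompt."""
    lines = []
    # Only include glossary terms that actually occur in the request.
    matched = {t: d for t, d in glossary.items() if t.lower() in prompt.lower()}
    if matched:
        lines.append("Terminology:")
        lines += [f"- {term}: {definition}" for term, definition in matched.items()]
    if rules:
        lines.append("Process rules:")
        lines += [f"- {rule}" for rule in rules]
    if regional_note:
        lines.append(f"Regional context: {regional_note}")
    lines.append(f"Request: {prompt}")
    return "\n".join(lines)


print(calibrate(
    "Draft a storage plan for incoming batch 7",
    glossary={},
    rules=["Quality inspection before storage",
           "Batch traceability takes precedence over efficiency"],
    regional_note="Account for nemawashi (pre-consultation) before formal decisions.",
))
```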

(III) Technical Implementation and Effect Evaluation of Prompt Engineering

Enterprise-level prompt engineering relies on systematic tools and quantitative indicators:

Low-code prompt building platform: LyndonAI's Optima Bot provides a visual editor, supporting business personnel to configure prompt logic without programming. A regional manager of a retail enterprise completed the design of the "promotion effect analysis" prompt within 30 minutes through this tool;

Three-dimensional evaluation system: Quantifying prompt quality through "accuracy (factual error rate), relevance (matching degree with business goals), and operability (clarity of implementation steps)". A bank increased the adoption rate of AI suggestions from 58% to 89% by optimizing loan approval prompts;

Version management mechanism: Controlling versions of prompts for key scenarios such as financial auditing and contract review, recording modifiers, time, and reasons to ensure compliance and traceability. A law firm successfully handled 3 regulatory audits through prompt version management.
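The version management mechanism can be illustrated with an append-only history that records the modifier, time, and reason for every change. The `PromptVersion` and `PromptHistory` classes below are a hypothetical sketch, not the API of any product named in this article.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    """One immutable entry in a prompt's change history."""
    version: int
    text: str
    modifier: str
    reason: str
    modified_at: datetime


class PromptHistory:
    """Append-only history for one prompt (hypothetical structure)."""

    def __init__(self) -> None:
        self._versions: list[PromptVersion] = []

    def commit(self, text: str, modifier: str, reason: str) -> PromptVersion:
        """Store a new version with who changed it, why, and when (UTC)."""
        entry = PromptVersion(len(self._versions) + 1, text, modifier, reason,
                              datetime.now(timezone.utc))
        self._versions.append(entry)
        return entry

    def latest(self) -> PromptVersion:
        return self._versions[-1]

    def audit_trail(self) -> list[str]:
        """Human-readable summary suitable for an audit report."""
        return [f"v{v.version} by {v.modifier}: {v.reason}" for v in self._versions]


history = PromptHistory()
history.commit("Review contract clauses.", "alice", "initial version")
history.commit("Review contract clauses and flag liability caps.", "bob", "add liability check")
print(history.audit_trail())
```

Entries are frozen and never overwritten, so an auditor can always see which prompt text was in force at a given time.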

III. Context Management: Building a "Cognitive Map" for AI to Understand Enterprises

If prompt engineering is the "language of human-machine communication," then context management is the "worldview for AI to understand enterprises." It builds an exclusive "business cognitive framework" for AI by integrating internal and external enterprise information, solving the core pain point that "general large models do not understand specific enterprise businesses."

(I) Dual Attributes of Context: From Explicit Data to Tacit Knowledge

Context is the sum of background information for AI to understand business, including two dimensions:

Explicit context: Structured information, such as organizational structure (department settings, reporting relationships), business processes (procurement approval nodes, sales funnel stages), industry standards (ISO quality system, GDPR compliance requirements), historical data (performance reports of the past three years, customer complaint records);

Tacit context: Knowledge that is difficult to quantify, such as corporate culture (innovation-oriented vs. risk-averse), decision-making logic (market priority vs. cost priority), customer relationships (long-term cooperation vs. one-time transactions), employee experience (senior sales negotiation skills, engineers' fault intuition).

For example, in the "Double 11 promotion" scenario of a retail enterprise, explicit context includes promotion time nodes, inventory data, and historical sales volume; tacit context includes empirical rules such as "30% of inventory should be reserved during the pre-sale period to cope with the peak period" and "focus on maintaining the price sensitivity of VIP customers." General AI can only provide suggestions based on explicit data, while LyndonAI, after context management, can integrate tacit rules, increasing the accuracy of promotion stock preparation by 40%.

(II) Four Pillars of Context Management

1. Data Layer: Breaking Silos to Build a Global Knowledge Network

Enterprise knowledge is scattered across multiple systems, and the first step in context management is to achieve "data interconnection":

Multi-source integration: Connecting ERP (financial data), CRM (customer data), HR systems (employee information), Wiki (process documents), Teams (chat records), etc., through LyndonAI's Fusion platform to form a "business-data-knowledge" association network. After integrating 12 systems, a group enterprise reduced the knowledge search time from an average of 2 hours to 8 minutes;

Dynamic injection: Real-time synchronization of business data (such as inventory changes, market public opinion, production progress) to ensure that AI decisions are based on the latest information. A logistics enterprise injected real-time road condition data into the route optimization model, improving delivery efficiency by 15%;

Unstructured processing: Parsing unstructured data such as contract scans, meeting recordings, and handwritten notes through OCR, speech-to-text, and other technologies. A law firm converted 500,000 pages of paper cases into searchable text, increasing case reuse rate by 65%.

2. Semantic Layer: Building an Enterprise-Level Knowledge Graph

The knowledge graph is the "nerve center" of context, revealing associations between knowledge through entity recognition and relationship modeling:

Entity definition: Clarifying core enterprise entities (such as "products," "customers," "suppliers") and their attributes (products include "model," "price," "warranty period");

Relationship modeling: Identifying logical associations between entities, such as the causal chain of "supplier-purchase order-quality inspection report-payment record." Through the knowledge graph, a manufacturing enterprise discovered that a supplier posed a delivery risk because of delayed quality inspection reports and promptly replaced the supplier to avoid production line shutdowns;

Terminology standardization: Establishing a unified "semantic dictionary" to solve the problem of multiple terms referring to the same concept. For example, by unifying "SKU," "material code," and "commodity number" into "inventory unit code," a retail enterprise eliminated terminology ambiguities in cross-departmental communication.
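The three elements of the semantic layer (entities, relationships, and a shared dictionary) can be sketched with plain Python data structures. The entity names, relation labels, and the `KnowledgeGraph` class below are invented for illustration; production systems would typically use a dedicated graph store.

```python
from collections import defaultdict

# "Semantic dictionary": departmental synonyms mapped to one canonical term.
CANONICAL = {
    "sku": "inventory unit code",
    "material code": "inventory unit code",
    "commodity number": "inventory unit code",
}


def normalize(term: str) -> str:
    """Return the canonical form of a term, or the cleaned term if unknown."""
    cleaned = term.strip().lower()
    return CANONICAL.get(cleaned, cleaned)


class KnowledgeGraph:
    """Minimal directed graph of (source, relation, target) triples."""

    def __init__(self) -> None:
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, source: str, relation: str, target: str) -> None:
        self.edges[source].append((relation, target))

    def trace(self, start: str) -> list[str]:
        """Depth-first walk listing every entity reachable from start."""
        seen, stack, order = {start}, [start], []
        while stack:
            node = stack.pop()
            order.append(node)
            for _, target in reversed(self.edges.get(node, [])):
                if target not in seen:
                    seen.add(target)
                    stack.append(target)
        return order


graph = KnowledgeGraph()
graph.add("Supplier A", "receives", "PO-1001")
graph.add("PO-1001", "checked_by", "QI-Report-77")
graph.add("QI-Report-77", "releases", "Payment-55")
print(graph.trace("Supplier A"))
print(normalize("Material Code"))
```

Tracing from a supplier surfaces every downstream record, which is the kind of association a keyword search would miss.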

3. Scenario Layer: Precipitating Industry Know-How

Context needs to be deeply bound to business scenarios to be valuable:

Digitization of expert experience: Transforming tacit experience into AI-learnable rules through interviews, workshops, etc. A hospital disassembled senior doctors' "diagnostic thinking for difficult cases" into a decision tree of "symptoms-examination items-differential diagnosis" and converted it into a prompt template, improving the diagnostic accuracy of young doctors by 30%;

Scenario-based encapsulation: Predefining context parameters for high-frequency scenarios (such as quarterly assessment, new product launch, crisis public relations). A fast-moving consumer goods enterprise encapsulated "market trends + competitor analysis + historical cases" context for the "new product launch" scenario, increasing the adoption rate of AI-generated marketing plans from 42% to 78%;

Dynamic adaptation: Adjusting scenario parameters according to business changes. After the popularization of remote work, a technology enterprise updated the context of the "team collaboration" scenario, adding new dimensions such as "asynchronous communication response time" and "online meeting participation evaluation."

4. Permission Layer: Ensuring Context Security and Controllability

Context contains a large amount of sensitive information, and permission management is a prerequisite for safe use:

Classification and grading: Classifying context into four levels of "public-internal-confidential-core confidential" with reference to the "Data Security Law." For example, product manuals are "public level," customer credit ratings are "confidential level," and core technical parameters are "core confidential level";

Principle of least privilege: Assigning access permissions according to job requirements. For example, HRBPs can view department performance data, while ordinary employees can only access general policies. An enterprise reduced unauthorized access to sensitive information by 90% through permission control;

Data desensitization: Blurring personal information (salary, ID number) and trade secrets (cost data, negotiation bottom line) while retaining business characteristics. Through desensitization processing, a financial enterprise trained risk control models across departments without exposing private data.
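The three permission controls above can be sketched together: ordered classification levels, a least-privilege check, and a masking function. The role names, the clearance table, and the assumed 18-character ID format are hypothetical choices for illustration; a real system would load clearances from an identity service and use vetted masking rules.

```python
import re
from enum import IntEnum


class Level(IntEnum):
    """Four classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    CORE_CONFIDENTIAL = 3


# Hypothetical clearance per role.
CLEARANCE = {"employee": Level.INTERNAL, "hrbp": Level.CONFIDENTIAL}


def can_read(role: str, doc_level: Level) -> bool:
    """Least privilege: roles without an entry only see public material."""
    return CLEARANCE.get(role, Level.PUBLIC) >= doc_level


def mask_id_numbers(text: str) -> str:
    """Mask all but the last 4 characters of 18-character ID numbers (assumed format)."""
    return re.sub(r"\b\d{14}(\d{3}[\dXx])\b", r"**************\1", text)


print(can_read("hrbp", Level.CONFIDENTIAL))
print(can_read("employee", Level.CONFIDENTIAL))
print(mask_id_numbers("ID 110101199003071234 on file"))
```

Defaulting unknown roles to the public level means a missing configuration entry fails closed rather than open.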

IV. Security Governance: The "Immune System" of Human-Machine Collaboration

While the "creativity" of generative AI improves efficiency, it also brings new risks—incorrect prompts may lead to decision biases, leakage of sensitive context may trigger compliance crises, and algorithmic biases may damage enterprise reputation. Therefore, the security governance of prompt engineering and context management has become an indispensable core link in knowledge management.

About Cyberwisdom Group

Cyberwisdom Group is a global leader in Enterprise Artificial Intelligence, Digital Learning Solutions, and Continuing Professional Development (CPD) management, supported by a team of over 300 professionals worldwide. Our integrated ecosystem of platforms, content, technologies, and methodologies delivers cutting-edge solutions, including:

wizBank: An award-winning Learning Management System (LMS)

LyndonAI: Enterprise Knowledge and AI-driven management platform

Bespoke e-Learning Courseware: Tailored digital learning experiences

Digital Workforce Solutions: Business process outsourcing and optimization

Origin Big Data: Enterprise Data engineering

Trusted by over 1,000 enterprise clients and CPD authorities globally, our solutions empower more than 10 million users with intelligent learning and knowledge management.

In 2022, Cyberwisdom expanded its capabilities with the establishment of Deep Enterprise AI Application Design and strategic investment in Origin Big Data Corporation, strengthening our data engineering and AI development expertise. Our AI consulting team helps organizations harness the power of analytics, automation, and artificial intelligence to unlock actionable insights, streamline processes, and redefine business workflows.

We partner with enterprises to demystify AI, assess risks and opportunities, and develop scalable strategies that integrate intelligent automation—transforming operations and driving innovation in the digital age.

Vision of Cyberwisdom

"Infinite Possibilities for Human-Machine Capital"

We are committed to advancing Corporate AI, Human & AI Development
